How to Create Unit Tests in Minutes and Without AI Slop

Writing unit tests is expensive. Not just in time, but in technical debt.

Every test you write is code you must maintain. When you refactor, you refactor twice: once for production code, once for test code. When dependencies change, you update mocks. When APIs evolve, you rewrite assertions.

What if there were a different way? What if your tests could be generated from your application's real behavior?

The Problem with Traditional Test Automation

Modern test automation has a hidden cost. End-to-end tools like Cypress, Playwright, and Selenium require QA engineers to be programmers. Even if AI writes the initial code, someone must review it, maintain it, version it, and fix it after every refactoring. The test suite becomes a "second product" that consumes resources.

Sure, you can use AI to generate hundreds of tests in seconds. But then what? You're stuck debugging flaky tests, fixing brittle assertions, and updating mocks every time your API changes. The AI slop might look impressive initially, but it becomes technical debt the moment your codebase evolves.

Even worse, traditional tests operate in a vacuum. They mock databases, simulate services, and guess at edge cases. When they fail, you're left hunting through logs, trying to correlate timestamps across multiple services, hoping to reconstruct what happened.

BitDive takes a different approach: there's no test code to write or maintain. Everything happens under the hood. You never touch the test logic.

A Different Approach: Capture & Replay

BitDive replaces manual test writing with a Capture & Replay process that creates robust unit and integration tests:

- Capture: Record the actual execution of key scenarios inside your service (from production or test environments).

- Replay: Use these recordings as a regression baseline, automatically replaying them on every build.

The key difference? BitDive doesn't use AI to guess what your code should do. It records what it actually did and verifies that behavior remains consistent.
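To make the idea concrete, here is a minimal sketch of capture and replay in plain Java. It is not BitDive's API: `CaptureReplay`, `capture`, and `replay` are hypothetical names, and a pure function stands in for a real service. Capture runs the code on observed inputs and stores what it actually returned; replay feeds the same inputs to a later build and reports every input whose output changed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Function;

/** Hypothetical, conceptual sketch of capture & replay (not BitDive's API). */
final class CaptureReplay {

    /** One recorded interaction: the input we saw and the output it produced. */
    record Recording<I, O>(I input, O output) {}

    /** Capture phase: run the real code on observed inputs and keep the actual results. */
    static <I, O> List<Recording<I, O>> capture(Function<I, O> code, List<I> observedInputs) {
        List<Recording<I, O>> baseline = new ArrayList<>();
        for (I input : observedInputs) {
            baseline.add(new Recording<>(input, code.apply(input)));
        }
        return baseline;
    }

    /** Replay phase: feed recorded inputs to the new code and return inputs whose output drifted. */
    static <I, O> List<I> replay(Function<I, O> newCode, List<Recording<I, O>> baseline) {
        List<I> regressions = new ArrayList<>();
        for (Recording<I, O> r : baseline) {
            if (!Objects.equals(r.output(), newCode.apply(r.input()))) {
                regressions.add(r.input());
            }
        }
        return regressions;
    }

    public static void main(String[] args) {
        Function<Integer, Integer> v1 = n -> n * n;             // behavior at capture time
        Function<Integer, Integer> v2 = n -> n < 3 ? n * n : n; // a buggy refactoring

        List<Recording<Integer, Integer>> baseline = capture(v1, List.of(1, 2, 3, 4));
        System.out.println(replay(v1, baseline)); // prints: []
        System.out.println(replay(v2, baseline)); // prints: [3, 4]
    }
}
```

Nobody typed the expected values here: they come from the recorded run, which is the property the approach relies on.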

How It Works: Automated Spring Boot Unit Tests

Let's walk through the process using a real demo application.

The Setup for Automated Unit Tests

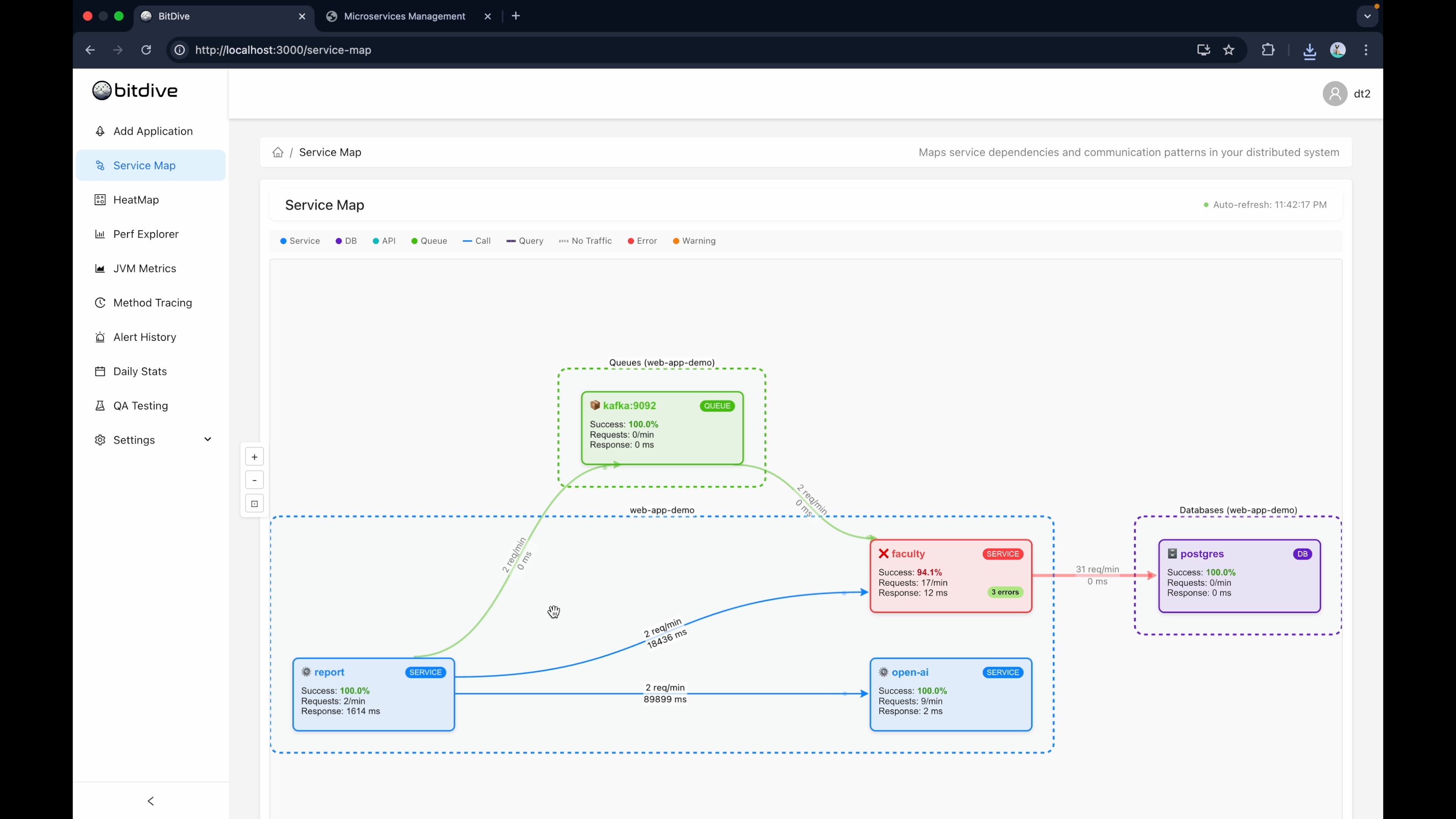

The process is simple: use your application naturally, and BitDive captures everything. In our walkthrough video, we use a sample Spring Boot microservices application (Faculty, Report, and OpenAI services with Kafka and PostgreSQL), but the approach works for any application.

Step 1: Capture Execution

Open your application and perform typical operations. BitDive runs in the background and captures the full execution context, including method calls, SQL queries, and dependency interactions, which makes it well suited to API unit testing.

After using the application, open the BitDive workspace. You'll see all microservices that participated in your actions, along with infrastructure dependencies like databases and message brokers.
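The article does not describe BitDive's instrumentation, so the sketch below only illustrates what capturing method calls means at the smallest scale. It assumes a hypothetical `CourseService` interface and wraps it in a JDK dynamic proxy (`java.lang.reflect.Proxy`), recording each call's method name, arguments, and return value. Capturing SQL queries and remote calls would need considerably more than this.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustrative call recorder built on a JDK dynamic proxy (hypothetical, not BitDive's mechanism). */
final class CallRecorder {

    /** One captured call: method name, arguments, and return value. */
    record CapturedCall(String method, List<Object> args, Object result) {}

    /** Hypothetical service interface standing in for real application code. */
    interface CourseService {
        List<String> studentsForCourse(String courseId);
    }

    /**
     * Returns a proxy that forwards every call to target and appends it to sink.
     * Note: Object methods such as toString() also pass through the handler and get recorded.
     */
    @SuppressWarnings("unchecked")
    static <T> T capture(Class<T> type, T target, List<CapturedCall> sink) {
        InvocationHandler handler = (proxy, method, args) -> {
            try {
                Object result = method.invoke(target, args);
                List<Object> argList = args == null ? List.of() : Arrays.asList(args);
                sink.add(new CapturedCall(method.getName(), argList, result));
                return result;
            } catch (InvocationTargetException e) {
                throw e.getCause(); // surface the real exception, not the reflection wrapper
            }
        };
        return (T) Proxy.newProxyInstance(type.getClassLoader(), new Class<?>[] {type}, handler);
    }

    public static void main(String[] args) {
        CourseService real = courseId -> List.of("alice", "bob");
        List<CapturedCall> trace = new ArrayList<>();
        CourseService observed = capture(CourseService.class, real, trace);

        observed.studentsForCourse("CS-101");
        trace.forEach(System.out::println);
    }
}
```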

Step 2: Debug Failures (Optional)

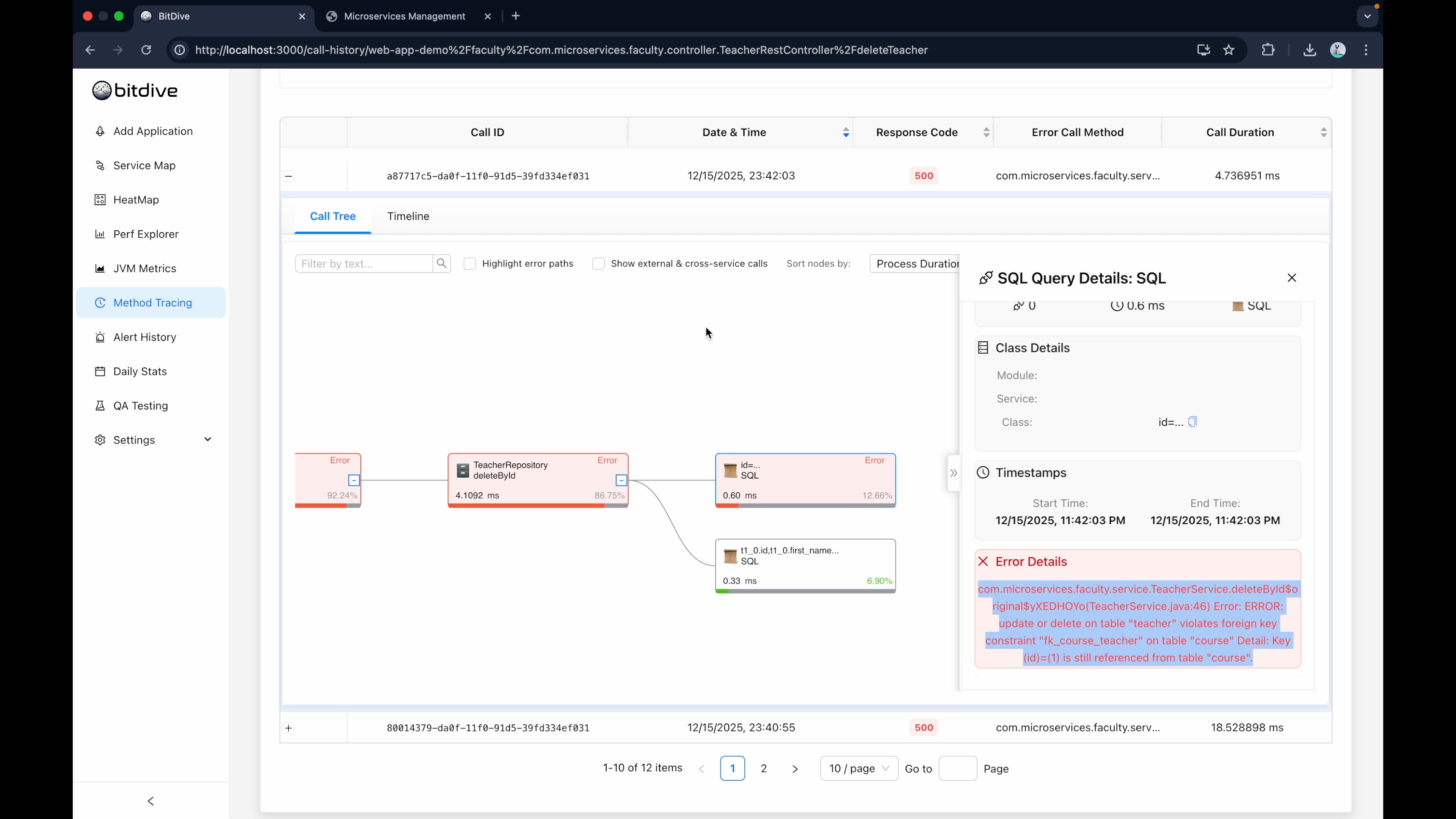

When something fails, you get immediate debugging insight. For example, if an operation returns a 500 error, open that call in the HeatMap and drill down to the exact method and SQL query that caused it.
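Conceptually, that drill-down is a walk over a recorded call tree. The sketch below assumes a hypothetical `Call` record (name, failed flag, children) rather than BitDive's trace format. It follows the chain of failed calls down to the deepest one whose children all succeeded: that is where the error originated, as opposed to the callers it merely propagated through.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical call-tree model for one recorded request (not BitDive's format). */
final class RootCause {

    record Call(String name, boolean failed, List<Call> children) {
        static Call ok(String name, Call... children) {
            return new Call(name, false, List.of(children));
        }

        static Call error(String name, Call... children) {
            return new Call(name, true, List.of(children));
        }
    }

    /**
     * Follows failed calls downward. The origin is the deepest failed call with
     * no failed children: the error started there and only propagated upward.
     */
    static Optional<Call> origin(Call call) {
        if (!call.failed()) {
            return Optional.empty();
        }
        for (Call child : call.children()) {
            Optional<Call> deeper = origin(child);
            if (deeper.isPresent()) {
                return deeper;
            }
        }
        return Optional.of(call);
    }

    public static void main(String[] args) {
        Call request = Call.error("GET /reports/course/42",
                Call.ok("FacultyClient.getCourse"),
                Call.error("ReportService.build",
                        Call.error("StudentRepository.findByCourse")));

        origin(request).ifPresent(c -> System.out.println("Error originated in " + c.name()));
        // prints: Error originated in StudentRepository.findByCourse
    }
}
```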

This debugging capability is valuable on its own, but the real power comes when you turn successful operations into regression tests.

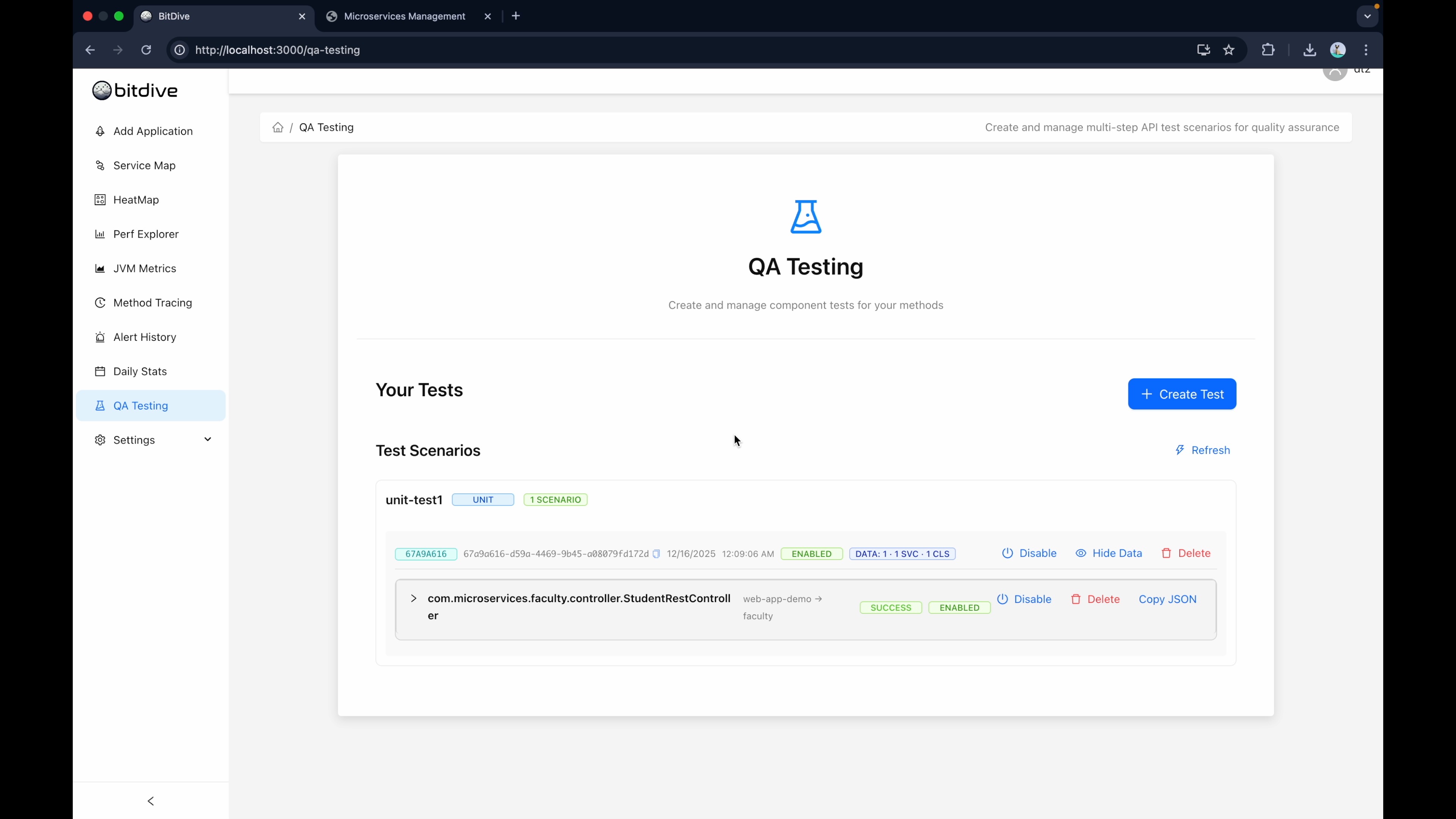

Step 3: Select Operations for Unit Testing

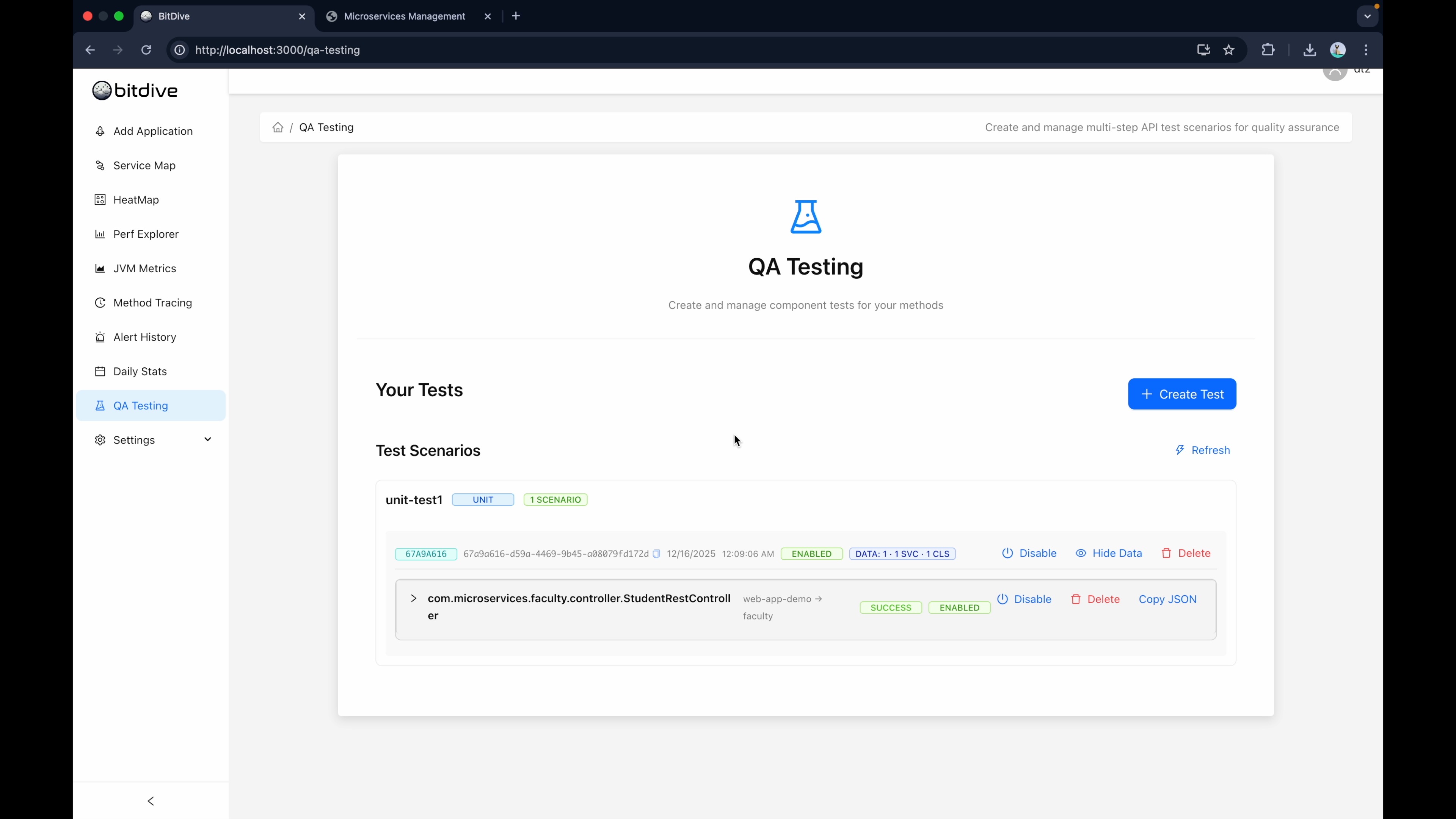

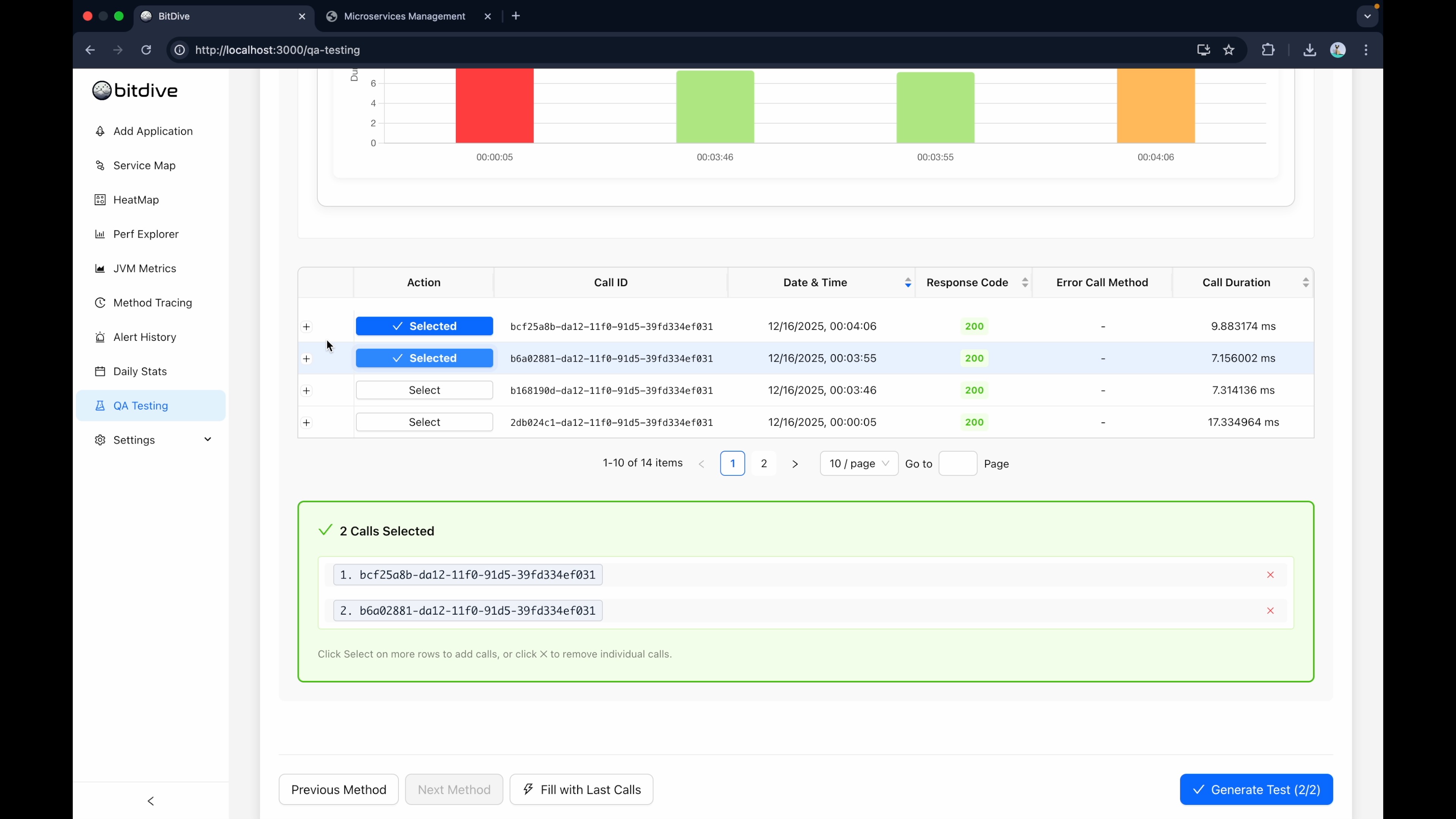

Go to the Testing tab and create a new test. Select the operations you want to preserve as regression tests. Complex calls like "GetStudentsForCourseReport" make excellent candidates because they exercise deep call chains and multiple dependencies.
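One way to turn "deep call chains and multiple dependencies" into a selection heuristic is sketched below. The `Node` and `Operation` records, the dependency labels, and the ranking rule (distinct dependencies first, then call-chain depth) are all assumptions for illustration; the article does not say that BitDive ranks operations automatically.

```java
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical ranking of recorded operations as regression-test candidates. */
final class CandidatePicker {

    /** One call in a recorded trace; dependency is e.g. "postgres" or "kafka", or null for plain code. */
    record Node(String method, String dependency, List<Node> children) {}

    record Operation(String name, Node root) {}

    /** Length of the longest call chain starting at (and including) this node. */
    static int depth(Node node) {
        int deepestChild = 0;
        for (Node child : node.children()) {
            deepestChild = Math.max(deepestChild, depth(child));
        }
        return 1 + deepestChild;
    }

    /** Number of distinct external dependencies touched anywhere in the tree. */
    static int dependencyCount(Node node) {
        Set<String> seen = new HashSet<>();
        collect(node, seen);
        return seen.size();
    }

    private static void collect(Node node, Set<String> seen) {
        if (node.dependency() != null) {
            seen.add(node.dependency());
        }
        for (Node child : node.children()) {
            collect(child, seen);
        }
    }

    /** Best candidates first: more distinct dependencies, then deeper call chains. */
    static List<String> rank(List<Operation> operations) {
        Comparator<Operation> byValue =
                Comparator.comparingInt((Operation op) -> dependencyCount(op.root()))
                        .thenComparingInt(op -> depth(op.root()));
        return operations.stream()
                .sorted(byValue.reversed())
                .map(Operation::name)
                .toList();
    }

    public static void main(String[] args) {
        Node ping = new Node("HealthController.ping", null, List.of());
        Node report = new Node("ReportController.getStudentsForCourseReport", null, List.of(
                new Node("FacultyClient.getStudents", "faculty-service", List.of(
                        new Node("StudentRepository.findByCourse", "postgres", List.of()))),
                new Node("ReportPublisher.publish", "kafka", List.of())));

        System.out.println(rank(List.of(
                new Operation("Ping", ping),
                new Operation("GetStudentsForCourseReport", report))));
        // prints: [GetStudentsForCourseReport, Ping]
    }
}
```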

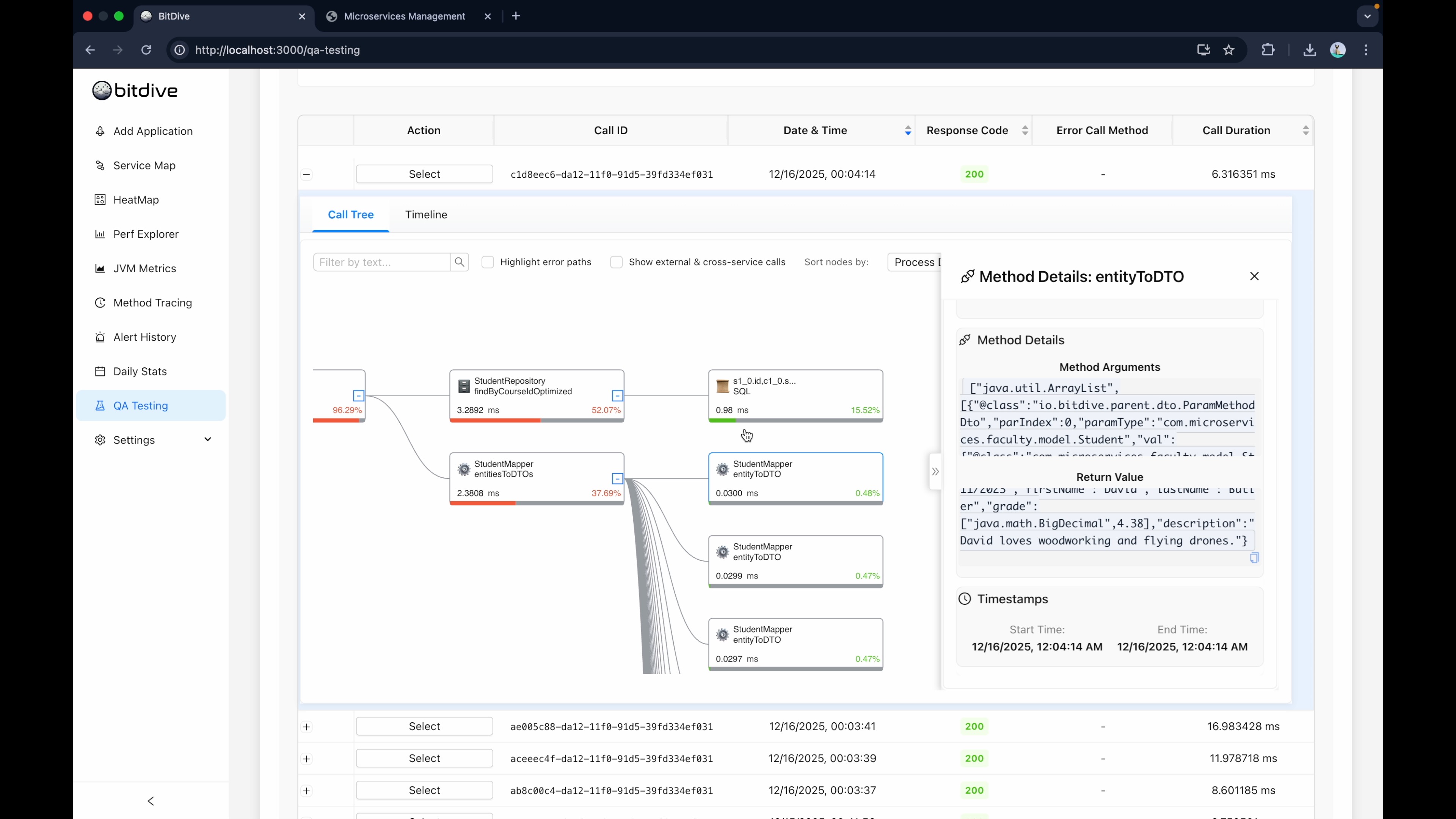

Step 4: Inspect Execution Details

For each operation, choose the specific recorded trace. You can inspect it in detail: input arguments, return values, DTOs passed between services, and SQL queries executed against the database.

This transparency lets you verify you're capturing the right baseline behavior before turning it into a test.
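To picture what such a trace contains, the sketch below models each recorded step as a hypothetical `Step` record (call depth, method, arguments, return value, SQL) and renders the trace as an indented listing for review. The field names and output layout are assumptions, not BitDive's trace schema.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical shape of recorded trace steps, rendered for human review. */
final class TraceReview {

    record Step(int depth, String method, List<Object> args, Object returned, List<String> sql) {}

    /** Indents each step by call depth and lists SQL under the method that issued it. */
    static String render(List<Step> steps) {
        StringBuilder sb = new StringBuilder();
        for (Step s : steps) {
            String indent = "  ".repeat(s.depth());
            String argText = s.args().stream().map(String::valueOf).collect(Collectors.joining(", "));
            sb.append(indent).append(s.method()).append('(').append(argText).append(") -> ")
              .append(s.returned()).append('\n');
            for (String query : s.sql()) {
                sb.append(indent).append("  SQL: ").append(query).append('\n');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<Step> trace = List.of(
                new Step(0, "ReportController.getStudentsForCourseReport", List.of(42),
                        "ReportDto[students=2]", List.of()),
                new Step(1, "StudentRepository.findByCourse", List.of(42), "[alice, bob]",
                        List.of("SELECT s.name FROM student s JOIN enrollment e"
                                + " ON e.student_id = s.id WHERE e.course_id = ?")));
        System.out.print(render(trace));
    }
}
```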

Step 5: Generate the Unit Test

Once you've selected your traces, click "Generate Test". BitDive packages the recorded execution into a replay plan with exact inputs, exact outputs, and exact dependency interactions.

Crucially, BitDive automatically virtualizes the environment. When the code tries to hit the database during replay, BitDive intercepts the call and returns the recorded response. No hand-written Mockito mocks are required.
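The virtualization idea can be sketched with an ordinary interface: during replay, the code under test receives an implementation that answers from recorded responses instead of a live database. Everything here (`Database`, `RecordedDatabase`, `studentsForCourse`, keying responses by SQL text plus parameters) is a hypothetical illustration, not BitDive's mechanism. One design choice worth noting: a query that was never recorded fails loudly rather than returning an empty result, because an unexpected query is itself a behavior change.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Sketch of dependency virtualization with hypothetical names (not BitDive's implementation). */
final class VirtualizedDb {

    /** The data-access interface that production code depends on. */
    interface Database {
        List<String> query(String sql, Object... params);
    }

    /** Replay-time stand-in that answers only from responses recorded at capture time. */
    static final class RecordedDatabase implements Database {
        private final Map<String, List<String>> responses = new HashMap<>();

        /** Stores the rows a query returned during capture. */
        void remember(List<String> rows, String sql, Object... params) {
            responses.put(key(sql, params), rows);
        }

        @Override
        public List<String> query(String sql, Object... params) {
            List<String> rows = responses.get(key(sql, params));
            if (rows == null) {
                // A query that never ran at capture time is itself a behavior change.
                throw new IllegalStateException("Unrecorded query: " + key(sql, params));
            }
            return rows;
        }

        private static String key(String sql, Object... params) {
            return sql + " " + Arrays.toString(params);
        }
    }

    /** Hypothetical production code under test; it cannot tell the database is virtual. */
    static List<String> studentsForCourse(Database db, int courseId) {
        return db.query("SELECT name FROM student WHERE course_id = ?", courseId);
    }

    public static void main(String[] args) {
        RecordedDatabase db = new RecordedDatabase();
        db.remember(List.of("alice", "bob"), "SELECT name FROM student WHERE course_id = ?", 42);
        System.out.println(studentsForCourse(db, 42)); // prints: [alice, bob]
    }
}
```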

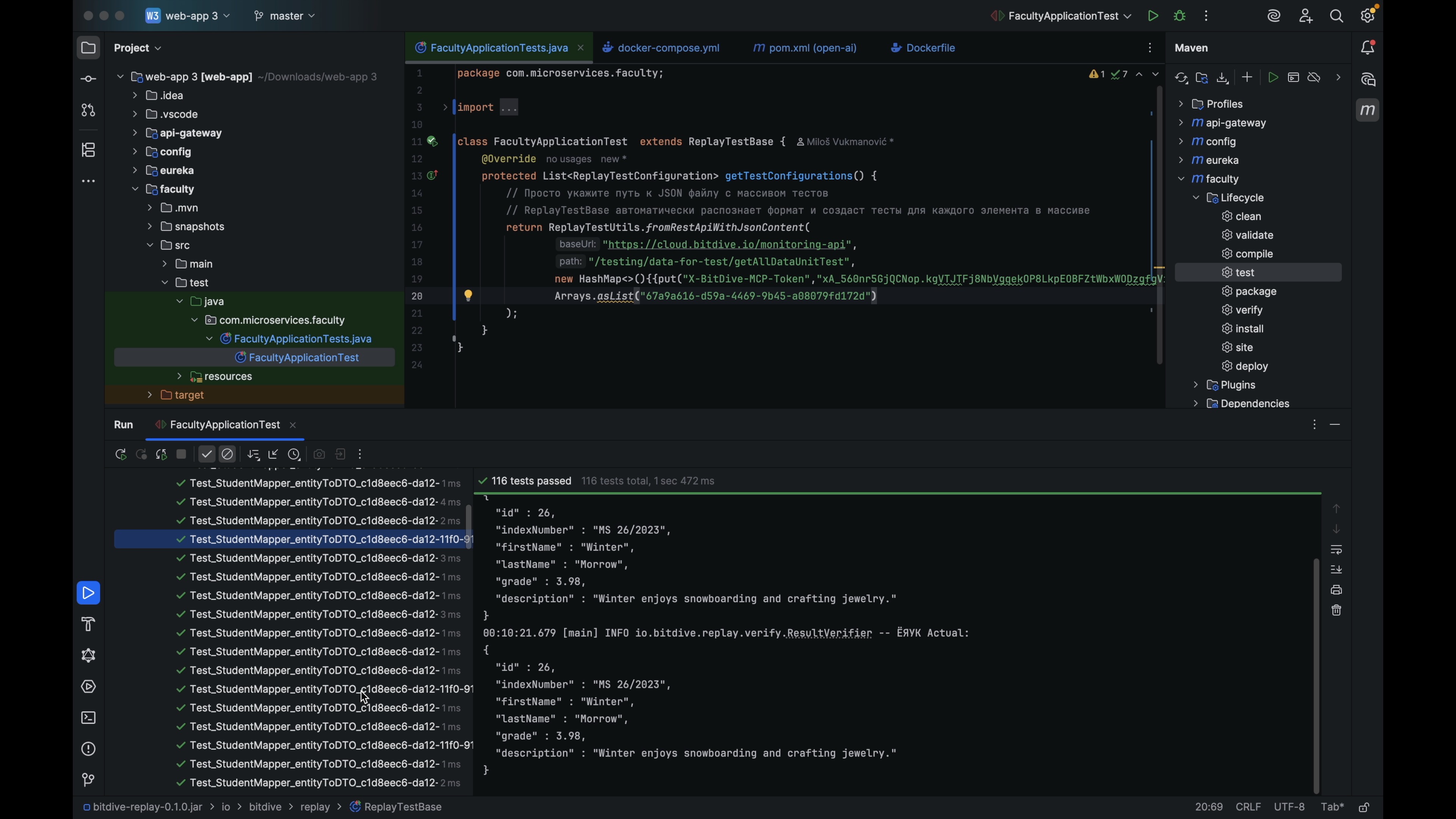

Step 6: Run as Standard Java Unit Tests

Copy the test identifier and paste it into your IDE's JUnit configuration. Then run:

```shell
mvn test
```

A single complex operation might generate many individual validation checks because BitDive verifies behavior across the entire call chain, method by method.
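The sketch below illustrates why one operation can yield many checks: it emits one check per recorded call rather than one per request, and each check keeps both the expected and the actual value for review. The `Call` and `Check` records are hypothetical, and pairing calls by position is a simplification; a real tool would need smarter alignment, since an extra call early in the chain shifts every later pair here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

/** Hypothetical per-method verification: one check per recorded call, not one per request. */
final class ChainVerifier {

    /** A call in a trace: which method ran and what it returned. */
    record Call(String method, Object returned) {}

    /** One validation check; a null side means the call is missing from that run. */
    record Check(int position, Call expected, Call actual) {
        boolean passed() {
            return Objects.equals(expected, actual);
        }
    }

    /** Pairs recorded and replayed calls by position and emits a check for each pair. */
    static List<Check> verify(List<Call> recorded, List<Call> replayed) {
        List<Check> checks = new ArrayList<>();
        int n = Math.max(recorded.size(), replayed.size());
        for (int i = 0; i < n; i++) {
            Call expected = i < recorded.size() ? recorded.get(i) : null;
            Call actual = i < replayed.size() ? replayed.get(i) : null;
            checks.add(new Check(i, expected, actual));
        }
        return checks;
    }

    public static void main(String[] args) {
        List<Call> recorded = List.of(
                new Call("ReportController.getReport", "ReportDto[students=2]"),
                new Call("FacultyClient.getCourse", "CourseDto[id=42]"),
                new Call("StudentRepository.findByCourse", List.of("alice", "bob")));
        List<Call> replayed = List.of(
                new Call("ReportController.getReport", "ReportDto[students=1]"),
                new Call("FacultyClient.getCourse", "CourseDto[id=42]"),
                new Call("StudentRepository.findByCourse", List.of("alice")));

        for (Check check : verify(recorded, replayed)) {
            System.out.println((check.passed() ? "PASS " : "FAIL ")
                    + "expected " + check.expected() + ", actual " + check.actual());
        }
    }
}
```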

Step 7: Review Unit Test Results

To see detailed validation, run the test in your IDE. You can walk through each method and see what was expected versus what actually came back.

Strategic Advantages of Zero-Code Unit Testing

This approach delivers benefits that go beyond just "less code to write":

1. Zero-Code Means Zero Debt

Your test coverage grows with product usage, not with test code. You can reach high coverage without maintaining a large repository of test scripts, and the recorded scenarios fit into unit, integration, and system testing strategies alike. When logic changes legitimately, you capture the new behavior instead of rewriting test code.

2. White Box Visibility Without Logs

Many traditional API tests only check HTTP status codes. BitDive validates the internal execution path: the same SQL queries, the same method calls, the same dependency interactions. When a test fails, you don't hunt through logs; you see the exact point where execution diverged.

This creates a "shared reality" between QA and developers. A failing test includes a link to the exact trace showing where behavior changed.
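Finding the exact divergence point amounts to comparing the recorded and replayed execution paths step by step. In the sketch below, a path is simply a hypothetical list of strings (method names and SQL statements), and `firstDivergence` and `describe` are illustrative names; `firstDivergence` returns the index of the first step that differs, or -1 if the paths are identical.

```java
import java.util.List;

/** Hypothetical helper that locates where a replayed path first departs from the recording. */
final class Divergence {

    /** Index of the first differing step, or -1 if the two paths are identical. */
    static int firstDivergence(List<String> recorded, List<String> replayed) {
        int common = Math.min(recorded.size(), replayed.size());
        for (int i = 0; i < common; i++) {
            if (!recorded.get(i).equals(replayed.get(i))) {
                return i;
            }
        }
        // Same prefix: the paths differ only if one of them has extra steps.
        return recorded.size() == replayed.size() ? -1 : common;
    }

    /** Human-readable summary of the divergence point. */
    static String describe(List<String> recorded, List<String> replayed) {
        int i = firstDivergence(recorded, replayed);
        if (i < 0) {
            return "identical";
        }
        String expected = i < recorded.size() ? recorded.get(i) : "<no step>";
        String actual = i < replayed.size() ? replayed.get(i) : "<no step>";
        return "step " + i + ": expected " + expected + " but was " + actual;
    }

    public static void main(String[] args) {
        List<String> recorded = List.of(
                "ReportController.getReport",
                "StudentRepository.findByCourse",
                "SQL: SELECT s.name FROM student s WHERE s.course_id = ?",
                "ReportPublisher.publish");
        List<String> replayed = List.of(
                "ReportController.getReport",
                "StudentRepository.findByCourse",
                "SQL: SELECT s.name FROM student s",
                "ReportPublisher.publish");
        System.out.println(describe(recorded, replayed));
    }
}
```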

3. Flexible Verification Modes

BitDive offers two replay modes:

- Virtualization Mode: Fast validation with all dependencies mocked in memory (seconds).

- Testcontainers Mode: Full integration testing with real databases and queues (minutes), bridging unit and integration testing.

You choose the trade-off between speed and depth per test.
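A per-test choice between the two modes can be sketched as a small factory. `ReplayMode`, `StudentStore`, and `storeFor` are hypothetical names; the container-backed branch is only a `Supplier` the caller provides, since starting a real PostgreSQL container would require the Testcontainers library, which this sketch does not use.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical per-test choice of dependency backend; all names are illustrative. */
final class ReplayModes {

    enum ReplayMode { VIRTUALIZED, TESTCONTAINERS }

    /** The dependency the code under test talks to. */
    interface StudentStore {
        List<String> byCourse(int courseId);
    }

    /**
     * VIRTUALIZED answers from recorded rows in memory (fast, no infrastructure).
     * TESTCONTAINERS delegates to a real store, e.g. one backed by a database
     * container that the caller starts; that setup is outside this sketch.
     */
    static StudentStore storeFor(ReplayMode mode,
                                 Map<Integer, List<String>> recordedRows,
                                 Supplier<StudentStore> realStore) {
        if (mode == ReplayMode.VIRTUALIZED) {
            return courseId -> {
                List<String> rows = recordedRows.get(courseId);
                if (rows == null) {
                    throw new IllegalStateException("No recording for course " + courseId);
                }
                return rows;
            };
        }
        return realStore.get(); // the real store is only created when this mode is chosen
    }

    public static void main(String[] args) {
        Map<Integer, List<String>> recorded = Map.of(42, List.of("alice", "bob"));
        StudentStore store = storeFor(ReplayMode.VIRTUALIZED, recorded,
                () -> { throw new UnsupportedOperationException("no container in this demo"); });
        System.out.println(store.byCourse(42)); // prints: [alice, bob]
    }
}
```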

4. Protection Against Semantic Drift

Many standard tests only verify "200 OK". BitDive validates the entire execution path: which methods were called, in what order, with what parameters, and which SQL queries ran.

If a developer refactors the code and accidentally changes the query logic (for example, removing a join or altering a WHERE condition), the test will fail even if the HTTP response looks correct. These are the subtle regressions that slip past status-code tests and cause production incidents weeks later.
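The difference can be illustrated with two checks over the same hypothetical `Execution` record (HTTP status plus the SQL issued, in order). After a refactoring that drops part of a WHERE condition, the status-only check still passes while the execution-path check fails. The record, method names, and SQL strings are assumptions for illustration only.

```java
import java.util.List;

/** Hypothetical comparison of a status-only check with an execution-path check. */
final class SemanticDrift {

    /** What one run of an endpoint did: its HTTP status and the SQL it issued, in order. */
    record Execution(int httpStatus, List<String> sql) {}

    /** Passes whenever the status code matches, regardless of what ran underneath. */
    static boolean statusOnlyTest(Execution baseline, Execution replay) {
        return baseline.httpStatus() == replay.httpStatus();
    }

    /** Passes only if the status and every SQL statement (in order) match the baseline. */
    static boolean executionPathTest(Execution baseline, Execution replay) {
        return baseline.equals(replay);
    }

    public static void main(String[] args) {
        Execution baseline = new Execution(200, List.of(
                "SELECT s.name FROM student s JOIN enrollment e ON e.student_id = s.id"
                        + " WHERE e.course_id = ? AND e.active = true"));
        // The refactoring silently dropped part of the WHERE condition.
        Execution afterRefactor = new Execution(200, List.of(
                "SELECT s.name FROM student s JOIN enrollment e ON e.student_id = s.id"
                        + " WHERE e.course_id = ?"));

        System.out.println("status-only test passes: " + statusOnlyTest(baseline, afterRefactor)); // true
        System.out.println("execution-path test passes: " + executionPathTest(baseline, afterRefactor)); // false
    }
}
```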

The Bottom Line

Writing unit tests shouldn't require writing unit test code. Your application's real behavior is the gold standard. Capture it. Replay it. Validate it. That's the essence of zero-code unit testing.